Test strategies, also known as test approaches, are used during the planning phase of testing along with test plans and estimation techniques. The choice of strategy is under the control of testers and test leaders, and it is one of the most powerful factors in the test effort and in the accuracy of the test plans and estimates (ISTQB, pg 142). There are several major types of strategy; some are more preventive and some are more reactive:

Analytical: These strategies use some formal or informal analytical technique, usually during the requirements and design stages of the project.

Model Based: These strategies tend to use the creation or selection of some formal or informal model for critical system behaviors, usually during the requirements and design stages of the project.

Methodical: These tend to rely on adherence to a pre-planned, systematized approach that has been developed in-house, assembled from various concepts developed in-house and gathered from outside, or adapted significantly from outside ideas. They may have an early or late point of involvement for testing.

Process: These strategies share a reliance upon an externally developed approach to testing, often with little or no customization. They may have an early or late point of involvement for testing.

Dynamic: These strategies focus on finding as many defects as possible during test execution and adapting to the realities of the system under test as it is when delivered, and they typically emphasize the later stages of testing.

Consultative or directed: These rely on a group of non-testers to guide or perform the testing effort, and typically emphasize the later stages of testing simply due to the lack of recognition of the value of early testing.

Regression-averse: These strategies use a set of procedures (usually automated) that allow them to detect regression defects. A regression-averse strategy may involve automating functional tests prior to release of the function, in which case it requires early testing, but sometimes the testing is almost entirely focused on testing functions that have already been released, which is in some sense a form of post-release test involvement.

Wednesday, December 3, 2014

Tuesday, November 25, 2014

Estimation Techniques

Estimation techniques, along with test plans and test strategies, are worked out during the planning phase of testing. ISTQB mentions 2 techniques: consulting people and analyzing metrics. The consulting technique involves working with experienced staff members and testers, drawing upon their collective wisdom to develop a work-breakdown structure for testing the project. The team then collaborates to understand the tasks, effort, duration, dependencies, and resource requirements that the tests will need to be successful.

Analyzing metrics can be as simple or as sophisticated as the tester makes it. ISTQB states that the easiest approach asks, 'How many testers do we typically have per developer on a project?'

A more reliable method classifies the project in terms of size (small, medium or large) and complexity (simple, moderate or complex), then looks at how long projects of that size and complexity have taken to test in the past, on average.

Another reliable yet easy approach that ISTQB mentions is to look at the average effort per test case in similar past projects and to use the estimated number of test cases to estimate the total effort. The more sophisticated methods that ISTQB mentions involve building mathematical models in a spreadsheet that look at historical or industry averages for certain key parameters (number of tests run by tester per day, number of defects found by tester per day, etc.) and then plugging in those parameters to predict duration and effort for key tasks or activities on your project. The tester-to-developer ratio is an example of a top-down estimation technique, in that the entire estimate is derived at the project level, while the parametric technique is bottom-up, at least when it is used to estimate individual tasks or activities.

The ISTQB authors prefer to start by drawing on the team's wisdom to create the work-breakdown structure and a detailed bottom-up estimate. They then apply models and rules of thumb to check and adjust the estimate bottom-up and top-down using past history. This approach tends to create an estimate that is both more accurate and more defensible than either technique by itself.

Even the best estimate must be negotiated with management, however. Negotiating sessions exhibit amazing variety, depending on the people involved. However, there are some classic negotiating positions. It's not unusual for the test leader or manager to try to sell the management team on the value added by the testing or to alert management to the potential problems that would result from not testing enough. It's not unusual for management to look for smart ways to accelerate the schedule or to press for equivalent coverage in less time or with fewer resources. In between these positions, you and your colleagues can reach a compromise, if the parties are willing. The authors' experience has been that successful negotiations about estimates are those where the focus is less on winning and losing and more on figuring out how best to balance competing pressures in the realms of quality, schedule, budget and features.

Thursday, November 13, 2014

IEEE 829 template

As promised in the last post, I will write about the IEEE 829 template, which is the standard test plan template presented in the ISTQB book.

The book seemed kind of vague to me as to what the template was about, but below is what the book had on the test plan template and what should be in it.

IEEE 829 STANDARD TEST PLAN TEMPLATE

Test plan identifier

Introduction

Test items

Features to be tested

Features not to be tested

Approach

Item pass/fail criteria

Suspension and resumption criteria

Test deliverables

Test tasks

Environmental needs

Responsibilities

Staffing and training needs

Schedule

Risks and contingencies

Approvals
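One way to put the template to work is as a checklist. The sketch below is my own illustration, not something from the book or the standard: the helper name `missing_sections` is hypothetical, and it simply reports which of the template's sections a draft plan has not covered yet.

```python
# Section names taken from the template listed above.
IEEE_829_SECTIONS = [
    "Test plan identifier", "Introduction", "Test items",
    "Features to be tested", "Features not to be tested", "Approach",
    "Item pass/fail criteria", "Suspension and resumption criteria",
    "Test deliverables", "Test tasks", "Environmental needs",
    "Responsibilities", "Staffing and training needs", "Schedule",
    "Risks and contingencies", "Approvals",
]


def missing_sections(draft_headings):
    """Return the template sections that do not appear in the draft (case-insensitive)."""
    present = {heading.strip().lower() for heading in draft_headings}
    return [s for s in IEEE_829_SECTIONS if s.lower() not in present]


# Hypothetical draft that only has three headings so far.
draft = ["Introduction", "Approach", "Schedule"]
for section in missing_sections(draft):
    print("Still missing:", section)
```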

I might update this post when I figure out more about the IEEE 829 standard Test Plan Template.

Wednesday, November 5, 2014

Test Plans

Test plans, estimates and strategies are 3 testing topics that go together and are prepared concurrently during the planning phase of testing. I will start by talking about test plans. There are many different definitions of a test plan, but the ISTQB book states that a test plan is mainly the project plan for the testing work to be done. Test plans differ from test design specifications, test cases or a set of test procedures in that test plans go into less detail.

ISTQB states that there are 3 main reasons why testers write test plans:

1. Writing a test plan guides our thinking. ISTQB explained this reason's logic as 'if we can explain something in words, we understand it. If not, there's a good chance we don't.'

Writing a test plan forces us to confront the challenges that await us and focus our thinking on important topics.

2. The test planning process and the plan itself can be a good excuse for a tester to communicate with other members of the project team, testers, peers, managers and other stakeholders. This is an important aspect since collaboration can help improve a tester's understanding of a system and their testing overall.

3. The test plan also helps us manage change. During early phases of the project, as we gather more information, we revise our plans. As the project evolves and situations change, we adapt our plans. Written test plans give us a baseline against which to measure such revisions and changes. Furthermore, updating the plan at major milestones helps keep testing aligned with project needs. As we run the tests, we make final adjustments to our plans based on the results. You might not have the time or the energy to update your test plans.

ISTQB suggests using a template when writing test plans to help remember important challenges. The ISTQB book presents a standard template, IEEE 829, which I will write about in my next post.

Wednesday, October 22, 2014

Practice Quiz Links

Here are some websites that have practice quizzes for the ISTQB certification exam.

Most of these have full practice tests meant to simulate the number of questions on the real exam. There are supposed to be 40 questions on the real exam, and you need to get at least 65% to pass.

http://istqb.patshala.com/

This site has several full practice tests, as well as tests for the individual chapters of the ISTQB book, although they are labeled by the main concept that each chapter teaches rather than as Chapter 1, 2, 3, 4, 5, 6.

CH 1: Fundamentals of Testing

CH 2: Testing through the software life cycle

CH 3: Static Techniques

CH 4: Test design techniques

CH 5: Test Management

CH 6: Tool support for testing

These exams don't have a time limit, so there is plenty of time to take notes or look for the answers if you're not sure what the right answer is. However, on the real test you won't be allowed to look for answers, so you probably shouldn't do it too often on practice tests.

http://www.testingexcellence.com/istqb-quiz/istqb-foundation-practice-exam-1/

This has 2 full practice exams and a bunch of other related practice tests for stuff like testing fundamentals, management, and white box testing. The practice exams aren't timed on this site so you can take your time.

Thursday, October 16, 2014

Code Metrics and Cyclomatic Complexity

Code metrics are a tool for static testing. They are covered in Section 3.3.2 in chapter 3 of the ISTQB book, which also briefly introduces the concept of cyclomatic complexity. Since questions on cyclomatic complexity and code metrics are found in practice tests, I thought it would be good to know.

Code metrics are the measurement scales and methods used in software testing, and cyclomatic complexity is one example of a code metric used in static testing. When performing static code analysis, code metrics can be used to calculate numerous attributes of the code, such as comment frequency, depth of nesting, cyclomatic number and number of lines of code. These attributes can be computed not only on finished code but also as the design and code are being created and as changes are made to a system, to see if the design or code is becoming bigger, more complex and more difficult to understand and maintain.
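To make a few of these attributes concrete, here is a rough Python sketch that computes lines of code, comment frequency and depth of nesting for a small snippet. Everything in it is an assumption for illustration: the function name `simple_metrics` is hypothetical, the sample code is made up, and nesting depth is approximated from indentation (4 spaces per level) rather than measured the way a real static analysis tool would.

```python
def simple_metrics(source, indent_width=4):
    """Compute a few basic code metrics from source text without running it."""
    lines = [line for line in source.splitlines() if line.strip()]
    comment_lines = sum(1 for line in lines if line.lstrip().startswith("#"))
    # Approximate nesting depth from leading spaces (assumes consistent indentation).
    depths = [(len(line) - len(line.lstrip(" "))) // indent_width for line in lines]
    return {
        "lines": len(lines),
        "comment_lines": comment_lines,
        "comment_ratio": comment_lines / len(lines) if lines else 0.0,
        "max_nesting": max(depths, default=0),
    }


# Made-up sample code to measure.
SAMPLE = '''\
# Return the largest even number in the list, or None.
def largest_even(numbers):
    best = None
    for n in numbers:
        if n % 2 == 0:
            # keep the biggest one seen so far
            if best is None or n > best:
                best = n
    return best
'''

for name, value in simple_metrics(SAMPLE).items():
    print(name, value)
```

Running the same measurement after each change is what lets you see whether the code is getting bigger or more deeply nested over time.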

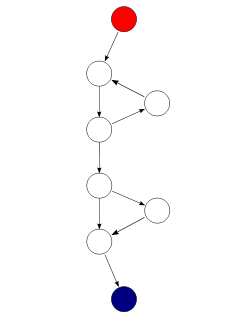

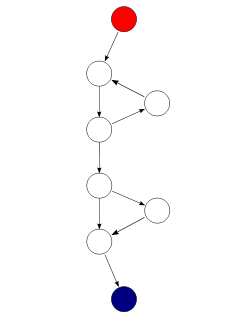

Cyclomatic complexity is a code metric used in static testing. The testers take all of the expected statements and decisions and compile them into a diagram or map of the system. The ISTQB book defines it with the equation L - N + 2P, where L is the number of edges (the lines connecting nodes), N is the number of nodes (the steps in the process flow reached when decisions are made), and P is the number of connected components (usually the entire process is connected, so this is usually equal to 1).

In the example diagram there are 9 edges, 8 nodes, and the entire thing is connected, meaning P = 1.

The cyclomatic complexity equation would look like: 9 - 8 + 2*1 = 3
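The same L - N + 2P calculation can be sketched in Python. The edge list below is a hypothetical graph I made up to match the counts in the example (9 edges, 8 nodes, all connected); it is not a transcription of the diagram, and the function name is my own.

```python
def cyclomatic_complexity(edges):
    """Return L - N + 2P for a flow graph given as (from_node, to_node) pairs."""
    nodes = {node for edge in edges for node in edge}

    # Build an undirected neighbour map so connected components can be counted.
    neighbours = {node: set() for node in nodes}
    for start, end in edges:
        neighbours[start].add(end)
        neighbours[end].add(start)

    seen = set()
    components = 0
    for node in nodes:
        if node in seen:
            continue
        components += 1  # found a new connected component
        stack = [node]
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            stack.extend(neighbours[current] - seen)

    return len(edges) - len(nodes) + 2 * components


# Hypothetical flow graph: 8 nodes, 9 edges, with decisions at nodes 2 and 5.
EXAMPLE_EDGES = [
    (1, 2), (2, 3), (2, 4), (3, 5), (4, 5),
    (5, 6), (5, 7), (6, 8), (7, 8),
]
print(cyclomatic_complexity(EXAMPLE_EDGES))  # 9 - 8 + 2*1 = 3
```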

Thursday, October 9, 2014

Designing Tests

For this post I have decided to write about how to design tests. Testers design tests whether they are using static or dynamic testing techniques. According to ISTQB, to design a test you need 3 things: test conditions, test cases and test procedures (scripts). Each of these is specified in its own document, created from information given by the developers and the tester's own interpretation of the provided info. Testing is also designed and executed with varying degrees of formality, depending on the customer's or testing team's preferences.

The design process usually begins with the testers looking through something that they can derive test info from, which is known as a test basis. A test basis can be many different things: an experienced user's knowledge, a business process, system requirements, specifications or the code. From the test basis we find test conditions, or the things we could test. Because test conditions can come from anything, they are usually vague.

Unlike test conditions, test cases are more specific. A test case means entering specific inputs into a system, and it also requires some understanding of how the system works. This means knowing what the system should do; the source of that correct behavior is referred to as a test oracle. After choosing an input, the tester needs to figure out what the expected result is and put it into the test case. Expected results include what appears on the screen in response to the input values, as well as changes to data or states and any other consequences.

The next and last step of the process is to arrange the test cases in an order that makes sense and to write down the steps needed to run them. This is a test procedure or test script. These procedures are then put into an execution schedule to determine which procedures are run first and who runs them.
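The three pieces described above (condition, case, procedure) can be sketched as a small Python example. All the names here are hypothetical: `CaseSpec`, `run_procedure` and the `shipping_fee` function standing in for the system under test are my own illustration, not anything defined by ISTQB.

```python
from dataclasses import dataclass


@dataclass
class CaseSpec:
    condition: str    # the test condition this case covers
    inputs: tuple     # the specific input values to enter
    expected: object  # the expected result, taken from the test oracle


def shipping_fee(order_total):
    """Hypothetical system under test: orders of 50 or more ship free."""
    return 0 if order_total >= 50 else 5


def run_procedure(cases, system):
    """A test procedure: run the cases in order and record pass or fail."""
    results = []
    for case in cases:
        actual = system(*case.inputs)
        results.append((case.condition, actual == case.expected))
    return results


CASES = [
    CaseSpec("small orders pay a fee", (20,), 5),
    CaseSpec("large orders ship free", (80,), 0),
]
for condition, passed in run_procedure(CASES, shipping_fee):
    print(condition, "PASS" if passed else "FAIL")
```

Notice that the conditions are vague sentences while the cases pin down exact inputs and expected results, which is the difference the post describes.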

There are also many different categories of design techniques that I will get into later.

Thursday, October 2, 2014

White Box vs. Black Box testing

Black box and white box testing are mentioned a lot in Chapter 4 of the ISTQB book. The textbook definition of black box testing in the ISTQB book is "testing, either functional or non-functional, without reference to the internal structure of the component or system". Basically, black box testing is when a tester knows what the software does from having access to things like requirements, but has no knowledge of how the software does these things, or anything about the branching logic. Black box testing tends to involve a lot of exploratory testing as the tester goes through the system to understand how it works. Techniques of black box testing include, but are not limited to, equivalence partitioning, boundary value analysis, decision tables, and state transition testing.
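As a small illustration of two of those techniques, the sketch below picks test inputs for a made-up requirement: "the age field accepts values from 18 to 65 inclusive". The requirement, the `accepts_age` function and the helper names are all assumptions of mine. The point is that the inputs are chosen from the requirement alone, without looking at how `accepts_age` is written.

```python
LOW, HIGH = 18, 65  # hypothetical requirement: ages 18 to 65 inclusive are valid


def accepts_age(age):
    """Hypothetical system under test; a black box tester would not read this."""
    return LOW <= age <= HIGH


def boundary_values(low, high):
    """Boundary value analysis: values just outside and on each boundary."""
    return [low - 1, low, high, high + 1]


def partition_representatives(low, high):
    """Equivalence partitioning: one value from each partition."""
    return {
        "below range": low - 10,
        "in range": (low + high) // 2,
        "above range": high + 10,
    }


for value in boundary_values(LOW, HIGH):
    print(value, accepts_age(value))

for name, value in partition_representatives(LOW, HIGH).items():
    print(name, value, accepts_age(value))
```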

Meanwhile, white box testing is defined as "testing based on an analysis of the internal structure of the component or system". In other words, in this type of testing the tester knows not only what the software is supposed to do, but also how it works internally. These kinds of tests allow testers to increase their test coverage by finding tests that haven't been created yet. It uses techniques like statement coverage, decision coverage, and branch coverage.
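The difference between statement coverage and decision coverage is easier to see with a tiny example. This is a hand-rolled sketch with made-up names; a real project would normally rely on a coverage tool instead of recording outcomes by hand like this.

```python
outcomes_seen = set()  # which outcomes of the decision have been exercised


def apply_discount(price, is_member):
    """Hypothetical function with a single decision."""
    outcomes_seen.add(bool(is_member))  # instrumentation added for the demo
    if is_member:
        price = price - 10
    return price


def decision_coverage():
    """Fraction of the decision's two outcomes (True and False) exercised so far."""
    return len(outcomes_seen) / 2


# One test with is_member=True runs every statement in the function,
# so statement coverage is already complete, but only the True outcome is covered.
apply_discount(100, True)
print("after first test:", decision_coverage())

# Knowing the structure tells us a second test is needed for the False outcome.
apply_discount(100, False)
print("after second test:", decision_coverage())
```

This is the sense in which white box techniques help find tests that haven't been created yet: looking at the structure reveals the untested outcome.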

Black box testing is good when the tester is starting to run tests on software they know little about, while white box testing is good for going more in depth into understanding the system.

Wednesday, September 24, 2014

Static Vs. Dynamic Testing

In the ISTQB book one of the first things mentioned in Chapter 3 is dynamic testing and static testing.

Dynamic testing involves executing software with a set of given input values and then checking the output values and comparing them to the expected results. Static testing involves examining software work products such as user guides, test cases, and scenarios, either manually or with a set of tools, without actually executing them. Reviews are an example of static testing at work. Basically, dynamic testing runs the software code, while static testing looks through documents and code without running them.

Static and dynamic testing techniques are complementary, because dynamic testing works on areas static testing does not, and vice versa. When they are used together they can greatly improve the quality of testing and help find a lot more defects. Static testing methods are good for finding deviations from standards, missing requirements, design defects, non-maintainable code and inconsistent interface specifications. Dynamic testing is good for finding defects in the code itself, as well as for assessing the quality of the software. Static testing is good in the early stages of testing and in reviews, while dynamic testing is good for the main executions.
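Here is a rough sketch of how the two approaches catch different things. The sample code, the "every function needs a docstring" standard and the helper names are all assumptions for illustration. The static check reads the code with Python's `ast` module without running it; the dynamic check executes the code and compares the output to the expected result.

```python
import ast

# Made-up code under test, containing a deliberate defect.
SOURCE = '''
def add(a, b):
    return a - b
'''


def functions_without_docstrings(source):
    """Static check: parse the code (no execution) and flag a standards deviation."""
    tree = ast.parse(source)
    return [
        node.name
        for node in ast.walk(tree)
        if isinstance(node, ast.FunctionDef) and ast.get_docstring(node) is None
    ]


def run_add(source, a, b):
    """Dynamic check: execute the code with given inputs and return the output."""
    namespace = {}
    exec(source, namespace)
    return namespace["add"](a, b)


print("Missing docstrings:", functions_without_docstrings(SOURCE))

expected = 5
actual = run_add(SOURCE, 2, 3)
print("expected", expected, "got", actual, "PASS" if actual == expected else "FAIL")
```

The static check flags the missing docstring but says nothing about the wrong arithmetic, and the dynamic check does the opposite, which is why the two work well together.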

Since a lot of the info in the ISTQB book is about the dynamic aspects of testing, I'll go more in depth with static testing. According to ISTQB, the use of static testing, e.g. reviews, on software work products has various advantages:

Since static testing can start early in the life cycle, early feedback on quality issues can be established, e.g. an early validation of user requirements and not just late in the life cycle during acceptance testing.

By detecting defects at an early stage, rework costs are most often relatively low and thus a relatively cheap improvement of the quality of software products can be achieved.

Since rework effort is substantially reduced, development productivity figures are likely to increase.

The evaluation by a team has the additional advantage that there is an exchange of information between the participants.

Static tests contribute to an increased awareness of quality issues.

Thursday, September 18, 2014

Formal Review Process

Since formal reviews seem to be more elaborate and complex than informal reviews, I have decided to go more in depth. Informal reviews are more common because they need less structure and planning, and they can happen every few days. Formal reviews happen less often, usually every few weeks, and have a lot more structure and planning involved.

Typical formal reviews have 6 steps:

1. Planning

This is the beginning of the review process. It starts when the author of the software requests a review from the moderator, whose job is to lead the inspection. The moderator then has to schedule the start and end dates of the review, and work with the author to come up with the review documents, including entry criteria. The document can't be too long, as most people can't retain a large number of pages of info. During this phase the people who will be involved in the review are decided on, and the moderator hands out duties and tasks to these chosen reviewers.

2. Kick-off

ISTQB states that this step is optional, but it is highly recommended as it gives the review team members a degree of understanding about the document. During this phase reviewers are given a short intro on the objective of the review and the document. The relationship between the review document and other documents used as sources is explained as well.

3. Preparation

This part of the review is where the individual reviewers gather up all of the data and materials they have compiled in testing and work through the document on their own. In this phase the reviewers identify defects, questions and comments, and all the issues are recorded. Using checklists in this phase is a way to make the review more efficient. The efficiency can also be checked by the number of pages checked in an hour, which varies greatly depending on the number of pages and the complexity of the software.

4. Review Meeting

This is the actual review, where the review team gets together to talk about the defects that they have found, as well as to ask questions and collaborate with one another. The bugs talked about in the review are recorded by the scribe. The severity of every defect is also recorded by the scribe, and there are 3 levels of severity: critical, major, and minor.

5. Rework

This is the phase where the reviewers try to retest and improve the documents step by step. It's up to the author to decide if the defects need to be fixed. Then the author updates the software and the document.

6. Follow up

This is done by the moderator, who determines whether the changes made by the author to the software and document are adequate and whether the bugs have been removed from the affected areas.

The roles in a formal review are divided among the author (program writer), a moderator (inspection leader), a scribe (recorder), the reviewers (testers), and a manager (the boss).

Tuesday, September 9, 2014

Formal Vs Informal Review

Section 3.2 of the ISTQB book is about the review process. There are 2 types of review: informal and formal. While each one has its differences, they share the goals of finding defects and helping fellow testers gain a better understanding of the software, the test requirements, and the test cases.

Informal reviews are the simpler and more commonly used of the 2 types. They can be done at any time during the early stages of testing, need as few as 2 people, can be conducted with little planning, and don't have to be led by a trained moderator. There are many types of informal review, but one thing they all have in common is that they are undocumented: no one needs to record a list of defects, and no compilation of test cases and defects is given out to the review members.

Formal reviews, on the other hand, are a lot more complex. They are usually done in the later stages of testing, and because of that they require more people. They have six main steps:

1. planning

2. kick-off

3. preparation

4. review meeting

5. rework

6. follow up

Formal reviews also have a number of roles that are allocated in the planning phase: moderator, scribe, author, reviewer, and manager. As with informal reviews, there are different types of formal review, such as technical reviews and walkthroughs, and not every role is present in every type. For instance, in a walkthrough the author explains the software and leads the review, while in a technical review a trained moderator leads instead and the author is usually absent. Scribes and reviewers, however, are present in most if not all formal review types.

Wednesday, September 3, 2014

The Ed Kit Principle

When I took a practice test for ISTQB certification I came across this question: 'The quality and effectiveness of software testing are primarily determined by the quality of the test processes used' is stated in a) Bill Hetzel Principle b) Ed Kit Principle c) IEEE 829 d) IEEE 8295. The correct answer was the Ed Kit Principle, and since I didn't know what that was I decided to do some research.

Basically the Ed Kit Principle states that "The quality and effectiveness of software testing are primarily determined by the quality of the test processes used." In other words, the quality of a tester's process determines how good the tests they run are. Like the Bill Hetzel Principle, the Ed Kit Principle isn't mentioned by name in the ISTQB software testing book, despite appearing once in a while on online practice tests. That doesn't mean it isn't present in the book, though; in fact it has a significant presence. If you have a copy of the book, the idea comes up a lot, at least in section 1.4, Fundamental Test Process. Just remember that you won't find the principle by name; what matters is that the quality of the test processes determines the quality of the tests.

Wednesday, August 27, 2014

The Bill Hetzel Principle

After taking a practice test for ISTQB certification I came across a question asking where 'testing must be planned' is stated, and the answer was the Bill Hetzel Principle. I wasn't sure what that was, and since this was a chapter 1 quiz I tried to find where it was mentioned in the ISTQB book, with no success, so I decided to do some research. I found that Bill Hetzel wrote a book on software testing called 'The Complete Guide to Software Testing' in 1988, and it is still considered a good one from what I can tell.

While I haven't been able to get access to it I found that some of his main testing principles are:

- Complete testing is not possible

- Testing is creative and difficult

- An important reason for testing is to prevent defects

- Testing is risk based

- Testing must be planned

- Testing requires independence

'Testing must be planned' can be found in section 1.2, 'What is Testing'.

'Testing requires independence' appears as part of the code of ethics, and can be found (more or less) along with the remaining principles in section 1.5, The Psychology of Testing.

The principles 'Testing is creative and difficult', 'An important reason for testing is to prevent defects', and 'Testing is risk based' may not be found word for word in the book, but much of what chapter 1 talks about comes down to the same basic principles.

Wednesday, August 20, 2014

The Test Process Part 2

Leaving off from the last post, I wrote that test activities can be divided into 5 phases of the testing process:

1. Planning and control

2. Analysis and design

3. Implementation and Execution

4. Evaluating exit criteria and reporting

5. Test closure activities.

I covered the first 3 so now I'll finish the list.

Evaluating exit criteria is the point in testing when the tester takes what was done in test execution and assesses it against the defined objectives. ISTQB suggests that this should be done for each test level, so we know when we have done enough testing and can move on to the next level. To assess the risk of declaring a component, activity, or level complete, we need to define a set of exit criteria, and these should be set and evaluated for each test level. According to ISTQB, evaluating exit criteria has the following major tasks:

• Check test logs against the exit criteria specified in test planning

• Assess if more tests are needed or if the exit criteria specified should be changed

• Write a test summary report for stakeholders
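The first two tasks above can be sketched in a few lines of Python. This is only an illustration: the thresholds, the `LogSummary` fields, and the sample numbers are assumptions of mine, since real exit criteria come from the test plan for each test level.

```python
from dataclasses import dataclass


@dataclass
class LogSummary:
    """Hypothetical totals pulled from the test logs of one test level."""
    planned: int
    executed: int
    passed: int
    open_critical_defects: int


# Assumed exit criteria; real values would be specified in test planning.
MIN_EXECUTED_RATIO = 0.95
MIN_PASS_RATIO = 0.90
MAX_OPEN_CRITICAL = 0


def unmet_exit_criteria(log):
    """Return a list describing each exit criterion that is not yet met."""
    executed_ratio = log.executed / log.planned if log.planned else 0.0
    pass_ratio = log.passed / log.executed if log.executed else 0.0

    unmet = []
    if executed_ratio < MIN_EXECUTED_RATIO:
        unmet.append("not enough of the planned tests were executed")
    if pass_ratio < MIN_PASS_RATIO:
        unmet.append("pass rate is too low")
    if log.open_critical_defects > MAX_OPEN_CRITICAL:
        unmet.append("critical defects are still open")
    return unmet


system_test = LogSummary(planned=200, executed=196, passed=180, open_critical_defects=1)
problems = unmet_exit_criteria(system_test)
print(problems if problems else "exit criteria met")
```

An empty list would mean the level can be closed. Anything else means either more testing is needed or the criteria themselves have to be revisited, and the result would feed into the test summary report.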

The last item on the list of test activities is test closure.

During test closure activities, the testers gather the data from the finished test activities of everyone involved. This includes checking and filing testware and analyzing facts and numbers. We may need to do this when the software is delivered. There are a number of other reasons why a testing team would close testing: the testers have gathered all the information they needed, the project is cancelled, a particular milestone is achieved after which testing no longer needs to continue, or a maintenance release or update is completed. Test closure activities include the following major tasks:

• Check which planned deliverables we actually delivered and ensure all incident reports have been resolved through defect repair or deferral.

• Finalize and archive testware, such as scripts, the test environment, and any other test infrastructure, for later reuse. This can save time if the testers have to test a new version of the same software.

• Hand over testware to the maintenance organization who will support the software and make any bug fixes or maintenance changes, for use in confirmation testing and regression testing. This group may be separate from the people who build and test the software; the maintenance testers are one of the customers of the development testers, and they will use the library of tests.

• Evaluate how the testing went and analyze lessons learned for future releases and projects. This might include process improvements for the software development life cycle as a whole and also improvement of the test processes.

Monday, August 18, 2014

The test process Part 1

Now that I've posted a few things about the different kinds of tests and the differences between functional and non-functional testing, I will go a little into the testing process. Test activities can be divided into 5 phases of the testing process:

1. Planning and control

2. Analysis and design

3. Implementation and Execution

4. Evaluating exit criteria and reporting

5. Test closure activities.

A test plan is defined by ISTQB as 'A document describing the scope, approach, resources, and schedule of intended test activities.' With a test plan a tester can identify many things, including the features to be tested, the tasks and who will do them, test environments, design techniques, entry/exit criteria, the rationale for these choices, and risks. Basically a test plan records the entire test process. A related task in this phase is test monitoring, where the status of the project is checked periodically through reports.

In test analysis and design, testers take the test objectives and turn them into test conditions and test cases. The activities in this stage go in the following order, as stated by ISTQB:

1. Review the test basis (such as the product risk analysis, requirements, architecture, design specifications, and interfaces) and examine the specifications of the software. Certain types of tests, such as black box tests, can be designed at this point as well. As we study the test basis, we often identify gaps and ambiguities in the specifications, because we are trying to identify precisely what happens at each point in the system, and this also prevents defects from appearing in the code.

2. Identify the test conditions based on analysis of test items, specifications, and what we know about their behavior and structure. This gives us a high-level list of what we are interested in testing. In testing, we use the test techniques to help us define the test conditions. From this we can start to identify the type of generic test data we might need.

3. Design test cases using techniques to help select representative tests that relate to particular aspects of the software which carry risks or which are of particular interest, based on the test conditions and going into more detail. I'll create a post later about test design.

4. Evaluate testability of the requirements and system. The requirements should be written in a way that allows a tester to design tests; for example, if the performance of the software is important, that should be specified in a testable way. If the requirements just say 'the software needs to respond quickly enough', that is not testable, because 'quickly enough' may mean different things to different people. A more testable requirement would be 'the software needs to respond in 5 seconds with 20 people logged on'. The testability of the system depends on aspects such as whether it is possible to set up the system in an environment that matches the operational environment and whether all the ways the system can be configured or used can be understood and tested. For example, if we test a website, it may not be possible to identify and recreate all the configurations of hardware, operating system, browser, connection, firewall and other factors that the website might encounter.

5. Design the test environment set-up and identify any required infrastructure and tools. This includes testing tools and support tools such as spreadsheets, word processors, project planning tools, and non-IT tools and equipment - everything we need to carry out our work.
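To show why the '5 seconds with 20 people logged on' wording from step 4 is testable, here is a rough Python sketch that turns it into an automated check. The `handle_request` function is a made-up stand-in for the real system, and using 20 threads to represent 20 logged-on users is my own simplification.

```python
import time
from concurrent.futures import ThreadPoolExecutor

MAX_RESPONSE_SECONDS = 5.0   # taken from the example requirement
CONCURRENT_USERS = 20        # taken from the example requirement


def handle_request(user_id):
    """Hypothetical stand-in for the system under test."""
    time.sleep(0.01)  # pretend the system does some work
    return f"ok:{user_id}"


def timed_request(user_id):
    """Return how long a single request took, in seconds."""
    start = time.perf_counter()
    handle_request(user_id)
    return time.perf_counter() - start


def slowest_response(users=CONCURRENT_USERS):
    """Send one request per simulated user at the same time; return the worst time."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        durations = list(pool.map(timed_request, range(users)))
    return max(durations)


worst = slowest_response()
print(f"slowest response: {worst:.3f}s")
assert worst <= MAX_RESPONSE_SECONDS, "requirement not met"
```

There is no way to write the final assertion for 'quickly enough', which is exactly the problem with that wording.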

In the test implementation and execution phase, we take the test conditions and make them into test cases and set up the test environment; then we actually run the tests. For test implementation, we develop and prioritize our test cases and create test data for them. We also write instructions for carrying out the tests and organize related test cases into collections, AKA test suites, to allow for more efficient test execution. Then, for test execution, the testers actually perform the tests they have created and compare the actual results with the expected results.
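As a small illustration of grouping test cases into a suite and comparing actual results with expected results, here is a sketch using Python's built-in `unittest` module. The `apply_discount` function and its test data are invented for the example; they are not from the ISTQB book.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    """Related test cases; each compares an actual result with an expected one."""

    def test_ten_percent(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_percent(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)


def build_suite():
    """Organize the test cases into a test suite, in the order we want them run."""
    suite = unittest.TestSuite()
    suite.addTest(DiscountTests("test_ten_percent"))
    suite.addTest(DiscountTests("test_zero_percent"))
    return suite


# Test execution: run the suite and report the outcome.
result = unittest.TextTestRunner(verbosity=0).run(build_suite())
print("tests run:", result.testsRun, "failures:", len(result.failures))
```

The order in which tests are added to the suite is one simple way to express the prioritization mentioned above.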

Friday, August 8, 2014

functional vs. non-functional testing

For this post I have decided to go more in depth on the differences between functional and non-functional testing. These are the categories into which most types of testing are divided.

Functional testing is defined by the ISTQB as "Testing based on an analysis of the specification of the functionality of a component or system." Basically it's testing done to see if the system performs the way that the test specifications and requirements say it should.

Functional testing concentrates on testing activities that verify a specific action or function of the code. These are usually found in the code requirements documentation, although some development methodologies work from use cases or user stories. Functional tests tend to answer the question of "can the user do this" or "does this particular feature work."

An example of a type of functional testing is black box testing, which tests the functionality of software without knowing how it works internally; testers only know what it's supposed to do.

ISTQB defines Non-Functional testing as "Testing the attributes of a component or system that do not relate to functionality." The attributes that don't relate to functionality include reliability, efficiency, usability, portability, and maintainability.

Wikipedia explains that "testing will determine the breaking point, the point at which extremes of scalability or performance leads to unstable execution. Non-functional requirements tend to be those that reflect the quality of the product, particularly in the context of the suitability perspective of its users."

The test types for non-functional attributes take the names of the attributes themselves: reliability, efficiency, usability, portability, and maintainability testing.

In conclusion, functional testing checks whether the software functions the way the client wants, while non-functional testing checks everything else about the system.

Wednesday, July 30, 2014

Test Types

Last week I wrote about what testing is and how much is enough. This week's post will be about a few types of tests used by testers. There are many types of tests out there, but the main ones I can think of right now are compatibility, regression, acceptance, alpha, beta, and performance testing.

Compatibility testing checks a piece of software's ability to run consistently well alongside other applications and on many different operating systems or browsers. In other words, it is a way to see whether the software works as intended on a PC, Mac, or tablet, or, if the product is a website, whether it works well in IE, Firefox, Safari, Chrome, etc.

Regression testing is done after a tester has submitted bugs and the programmers have come up with fixes: the tester goes into the system again and looks for bugs in the area where the original bug was found. This is done to make sure that the changes have not created any new bugs in the system and that the fix actually solved the original problem.
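Here is a tiny Python sketch of what those two checks can look like once they are automated. The `parse_quantity` function and the bug it supposedly had (crashing on input like '1,000') are invented for the example.

```python
def parse_quantity(text):
    """Hypothetical function after a fix: it used to crash on input like '1,000'."""
    return int(text.replace(",", ""))


def test_fix_for_reported_bug():
    # Confirms that the originally reported bug is actually solved.
    assert parse_quantity("1,000") == 1000


def test_existing_behaviour_still_works():
    # Re-checks behaviour that worked before the fix, to catch new bugs
    # introduced by the change.
    assert parse_quantity("12") == 12
    assert parse_quantity("0") == 0


test_fix_for_reported_bug()
test_existing_behaviour_still_works()
print("regression checks passed")
```

Keeping both kinds of test around means they can be re-run every time the same area of the code changes again.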

Acceptance testing is done to ensure that the requirements or specifications of the product are met. In the case of software, this can include performance testing, which is done to see how the software performs in terms of responsiveness and stability under a particular workload. It can also serve to investigate, measure, validate, or verify other quality attributes of the system, such as scalability, reliability, and resource usage.

Alpha and beta testing are interconnected. Alpha testing is done at the developer's site by testers to see if the software is ready for release. Beta testing is usually done after alpha testing: the company releases a sort of 'demo' version of the software to the public to see if ordinary users can find any additional bugs in the system.

Wednesday, July 23, 2014

What is Testing + how much is enough?

Since I wrote about bugs last post it's natural to move on to the question 'What is Testing?'.

Software testing is an objective investigation into a piece of software or a computer system before it is implemented, in order to see whether it meets all of its expectations and is ready to be released.

The main reason software developers don't test their own products is a lack of objectivity: on a subconscious level, they may not want to find anything wrong with their creation.

Though finding bugs is what testers do, the tester's job is not to prove that the software is full of problems but to check whether the system meets all of the client's expectations. No matter how much testing a tester does, there will always be bugs that escape the tester's eyes. The ISTQB book Foundations of Software Testing briefly explains that exhaustive testing is impossible, meaning that it is impossible to detect every single bug.

I may post an article later on why exhaustive testing is impossible, but the main reasons are that testers will not always be able to test the software in the same way an ordinary user would, that there isn't enough time or resources to test everything, and that bugs may exist in areas the customer felt were too unimportant to need testing (ex: grammar/spelling).

Finally, the question of how much testing is enough has no definite answer. In fact it is mostly determined by how much time and money the client has given you, and even this can vary greatly from client to client.
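A quick back-of-the-envelope calculation shows how fast the 'not enough time' problem shows up. The form fields, the number of values for each, and the speed of 10 tests per second are all numbers I made up for the sake of the arithmetic.

```python
import math

# Hypothetical sign-up form: how many distinct values each field can take.
field_value_counts = {
    "country": 200,
    "birth_year": 120,
    "language": 50,
    "newsletter_opt_in": 2,
    "account_type": 5,
}

# Every combination of field values is a separate test case.
total_combinations = math.prod(field_value_counts.values())

TESTS_PER_SECOND = 10  # assumed execution speed
seconds = total_combinations / TESTS_PER_SECOND
days = seconds / (60 * 60 * 24)

print(f"{total_combinations:,} combinations, about {days:.1f} days of non-stop testing")
```

That is for one small form with only five fields, and it ignores free-text input and the order in which a user does things.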

Thursday, July 17, 2014

Some basic facts on Bugs

I just felt like I should start out the first serious post by explaining what exactly a bug is.

First off, a bug is defined by Wikipedia as "an error, flaw, failure, or fault in a computer system or program that causes it to produce an incorrect or unexpected result." There are many kinds of bugs, and their effects range from minor grammar errors to big bugs that can crash computers and cost tons of money or even lives. A recent example of a major bug came last year, when the Affordable Care Act was implemented here in the US and the website built for it ran into all sorts of problems due to inadequate testing. This is, of course, why software testing is seen as an essential job.

Errors are usually the most common type of bug, and they are created either by the developers or by a user entering an incorrect value in the software. An easy way to remember this is the phrase 'human error'.

Failures and faults are bugs that come from the software itself.

Wikipedia defines the two terms this way: "A fault is defined as an abnormal condition or defect at the component, equipment, or sub-system level which may lead to a failure." while "A Failure is the state or condition of not meeting a desirable or intended objective." Basically, failures are caused by faults or defects in the system.
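Here is a tiny Python sketch of how I picture the difference. The function names are made up for this example: the developer's error was forgetting about empty lists, the fault is the missing check that sits in the code, and the failure is what happens when someone finally passes in an empty list.

```python
def average(values):
    # Fault: there is no guard for an empty list, so len(values) can be 0.
    return sum(values) / len(values)


def fails_on(values):
    """Return True if calling average() on these values produces a failure."""
    try:
        average(values)
    except ZeroDivisionError:
        # Failure: the dormant fault was triggered and the call crashed.
        return True
    return False


print(average([2, 4, 6]))    # the fault is present, but nothing goes wrong
print(fails_on([2, 4, 6]))   # no failure for normal input
print(fails_on([]))          # the empty list triggers the failure
```

The point is that a fault can sit quietly in the code for a long time, and a failure only shows up when the right (or wrong) input comes along.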

Lastly, the term 'bug' has apparently been used for over a century to describe mechanical or engineering problems. But when I first started learning about software bugs, I heard that the first computer bug was discovered at Harvard in the late 1940s, when computers were giant things that took up entire rooms, and that it was a moth that had gotten into the computer. It was a literal 'bug' in the system, which is kind of funny, and a lot easier to fix.

Below is a link to an image of this legendary first bug.

http://en.wikipedia.org/wiki/Software_bug#mediaviewer/File:H96566k.jpg

Friday, July 11, 2014

Post number 1!!:D

Greetings, aspiring QA software and game testers!!

Anyone who is reading this post is looking at the first one on this blog!

So this first post is going to be about me and my experience in QA testing. First off, I am a 25-year-old UC Irvine alum, and I am an avid marathon runner.

As far as my experience in testing goes, I have been a QA tester at a small testing company in Santa Monica for about a year, and as a kid I took part in some game testing. When I first got the idea of being a tester, I had found a job posting on Craigslist asking for temporary QA testers at Square Enix's office in El Segundo. I felt that it would be an awesome opportunity to play games and get paid to do it, so I signed up for an interview right away. I didn't get the job, but then my dad suggested that I try a small startup company in Santa Monica, and after some initial resistance to the idea I decided to try it out and I was hired!

After that I went through some free mandatory training and gained the basic skills needed to work in the industry. I got the idea of making this blog when I watched a YouTube video in which a famous tester named James Bach gave a lecture about testing and how to gain a reputation, and among his tips was that a lot of well-known software testers have a blog. I decided to start this blog to share what I've learned and a few interesting bugs that I come across.

Things you can expect to see posted on this blog include basic facts and info on QA testing, and bugs that I have found or will find. I am also currently studying for the International Software Testing Qualifications Board (ISTQB) certification exam. Certification is supposedly not required, but it helps in getting jobs, so I will also post some notes I take on the subject.

Enjoy!
